In an era where digital transformation drives business success, building unified pipelines that bring DevOps and MLOps together is becoming a necessity rather than an option. By integrating DevOps, known for automating software development and deployment, with MLOps, the practice of operationalizing machine learning models, businesses can build resilient, scalable, and automated pipelines that increase both innovation speed and operational stability. This fusion is changing how organizations develop, deploy, and maintain intelligent applications that deliver continuous value.

Understanding DevOps and MLOps

To appreciate the value of building unified pipelines, one must first understand the distinct yet complementary domains of DevOps and MLOps.

What is DevOps?

DevOps is a set of cultural philosophies, practices, and tools that increase an organization’s ability to deliver applications and services rapidly and reliably. It revolves around automation of software building, testing, and deployment through Continuous Integration/Continuous Delivery (CI/CD) pipelines. This approach fosters collaboration between development and operations teams, reduces errors, and speeds up release cycles.

What is MLOps?

In contrast, MLOps applies DevOps principles to the unique challenges of machine learning (ML) systems. Unlike traditional software, ML models rely heavily on data quality, require iterative training and testing, and are prone to performance drift over time. MLOps focuses on automating the entire lifecycle of ML models—from data ingestion and training to deployment and continuous monitoring—ensuring models remain accurate, fair, and reliable in production environments.

Why Unified Pipelines Matter

Integrating DevOps and MLOps creates a powerful synergy that drives enterprise efficiency and accelerates AI adoption.

Closing the Gap Between Software and AI Development

Conventionally, DevOps and MLOps teams work in silos, which hampers collaboration and delays deployments. Unified pipelines instead let teams manage code, data, and models in one consolidated workflow. This reduces handoff friction, shortens time-to-market, and improves overall system reliability.

Driving Business Value Faster

Moreover, unified pipelines let organizations ship software features and updated ML models through the same continuous delivery process. This matters for applications such as personalized recommendations, fraud detection, or predictive maintenance, where adapting quickly to changing data patterns can be a competitive advantage.

Ensuring Governance and Compliance

Furthermore, merging DevOps and MLOps strengthens governance by treating ML models as first-class citizens within the software supply chain. As a result, organizations can apply robust security and compliance controls, maintain auditability, and proactively manage risks—especially critical where regulatory standards apply.

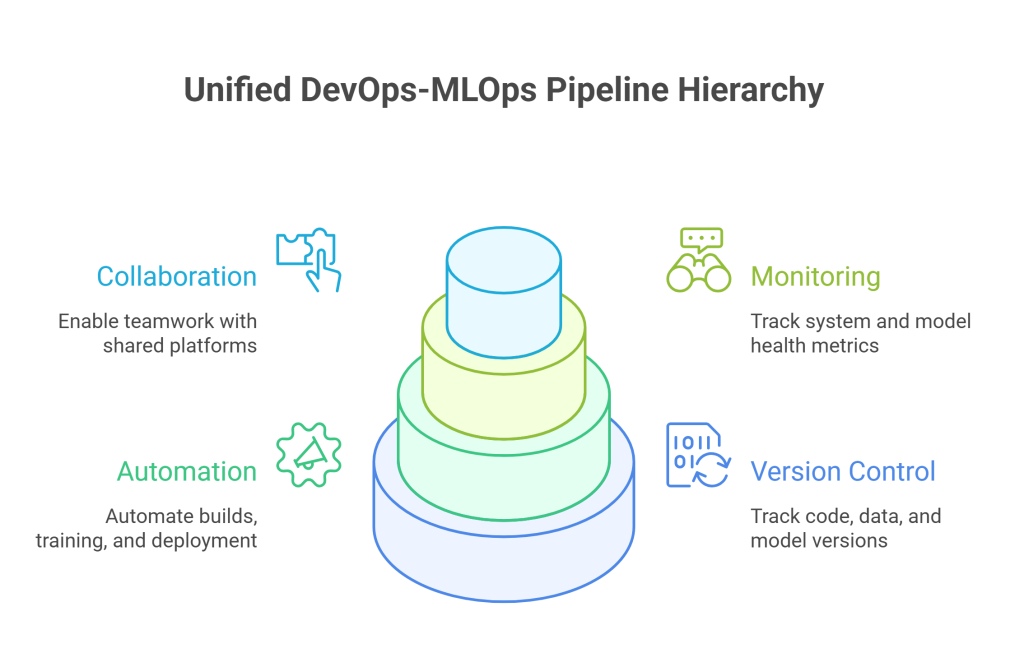

Key Components of Unified DevOps-MLOps Pipelines

Bringing together DevOps and MLOps requires harmonizing various architectural and operational layers.

Version Control for Code, Data, and Models

In addition to standard source code versioning with Git, machine learning pipelines need versioned datasets and trained model artifacts. Tools such as Data Version Control (DVC), which versions datasets and large artifacts alongside Git, and MLflow, which tracks experiments and registers models, help keep these components traceable so that results are reproducible and consistent across environments.
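As a rough illustration of what versioning code, data, and models together means, the sketch below ties a code revision to content hashes of the dataset and model bytes. It uses only the Python standard library and does not reproduce the DVC or MLflow APIs; `fingerprint`, `build_manifest`, and the sample values are hypothetical names chosen for this example.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest that identifies the exact content."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(code_rev: str, dataset: bytes, model: bytes) -> dict:
    """Tie one code revision to the exact dataset and model bytes it used."""
    return {
        "code_rev": code_rev,
        "dataset_sha256": fingerprint(dataset),
        "model_sha256": fingerprint(model),
    }


if __name__ == "__main__":
    # Placeholder inputs; a real pipeline would read files and a Git commit id.
    manifest = build_manifest("abc1234", b"id,amount\n1,9.99\n", b"fake-model-bytes")
    print(json.dumps(manifest, indent=2))
```

If any one of the three inputs changes, the manifest changes, which is the property that makes a run reproducible and auditable.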

Automation and Continuous Integration/Delivery

Unified pipelines use automation to handle software builds, data preprocessing, model training, testing, and deployment in one flow. CI/CD servers such as Jenkins or GitLab CI/CD and workflow orchestrators such as Kubeflow or Airflow can run these stages, minimizing manual intervention and errors.
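The idea of one pipeline covering build, preprocessing, training, validation, and deployment can be sketched as a list of ordered stages that share state and stop at the first failure. This is a toy runner written for illustration, not how Jenkins, Kubeflow, or Airflow are configured; every stage function and the `STAGES` list are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A stage is a name plus a function that reads the shared state
# and returns the keys it wants to add or update.
Stage = Tuple[str, Callable[[Dict], Dict]]


def run_pipeline(stages: List[Stage], initial: Dict) -> Dict:
    """Run stages in order; stop at the first failure and report it."""
    state = dict(initial)
    completed: List[str] = []
    for name, step in stages:
        try:
            state.update(step(state))
        except Exception as exc:  # a real runner would also log and notify
            return {**state, "completed": completed,
                    "failed_stage": name, "error": str(exc)}
        completed.append(name)
    return {**state, "completed": completed, "failed_stage": None}


# Hypothetical stages standing in for real build and ML steps.
def build_app(state: Dict) -> Dict:
    return {"artifact": "app-build"}


def preprocess(state: Dict) -> Dict:
    return {"rows": [r for r in state["raw"] if r is not None]}


def train(state: Dict) -> Dict:
    # The "model" here is just the mean of the cleaned rows.
    return {"model": sum(state["rows"]) / len(state["rows"])}


def validate(state: Dict) -> Dict:
    if not 0 <= state["model"] <= 100:
        raise ValueError("model output outside expected range")
    return {}


def deploy(state: Dict) -> Dict:
    return {"deployed": True}


STAGES: List[Stage] = [
    ("build", build_app),
    ("preprocess", preprocess),
    ("train", train),
    ("validate", validate),
    ("deploy", deploy),
]

if __name__ == "__main__":
    print(run_pipeline(STAGES, {"raw": [10, None, 30]}))
```

Because validation sits before deployment in the same sequence, a model that fails its checks never reaches the deploy stage.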

Monitoring and Observability

While DevOps teams monitor infrastructure metrics like uptime and latency, MLOps teams track model-specific metrics such as accuracy, drift, and fairness. An integrated monitoring platform visualizes both system and ML health, enabling holistic operational insight.
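Drift can be quantified in several ways. One common choice, used here as an assumption since the article does not name a metric, is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a training baseline. The function names, bin count, and sample data below are illustrative.

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values per bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    eps = 1e-6  # avoids log(0) and division by zero for empty bins
    return [max(c / len(values), eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if the baseline is constant
    edges = [lo + i * width for i in range(bins + 1)]
    e = _bin_fractions(expected, edges)
    a = _bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [float(v) for v in range(100)]
    shifted = [v + 50.0 for v in baseline]
    print("no drift:", psi(baseline, baseline))
    print("shifted :", psi(baseline, shifted))
```

A frequently quoted rule of thumb treats PSI above roughly 0.2 as a significant shift, but the right threshold depends on the feature and the application.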

Shared Collaboration Platforms

Code hosting platforms such as GitHub or GitLab, ML experiment trackers such as MLflow, and container orchestration systems such as Kubernetes give development and data science teams common ground, enabling smooth teamwork and transparent workflows.

Step-by-Step Guide to Building Unified Pipelines

Creating a successful unified pipeline demands careful planning and iterative development.

Step 1: Cultivate a Cross-Functional Culture

Successful pipeline unification begins with breaking down organizational silos. Encouraging developers, data scientists, and operations engineers to collaborate fosters shared ownership and improves cross-disciplinary understanding.

Step 2: Standardize Processes and Documentation

Defining clear processes for coding, experiment tracking, model validation, and deployments ensures alignment across teams. This shared workflow minimizes confusion and streamlines handoffs.

Step 3: Automate the Full Lifecycle

Implement automation for data ingestion, preprocessing, model training and retraining, validation, deployment, and rollback. Automated triggers—such as new data arrival or model performance degradation—should seamlessly activate retraining pipelines.
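The two triggers mentioned above, new data arrival and performance degradation, can be expressed as a small policy check that a scheduler or CI job calls. This is a minimal sketch; the `RetrainPolicy` thresholds and the reason labels are assumptions made for illustration, not recommended values.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RetrainPolicy:
    """Hypothetical thresholds; tune them per model and business need."""
    min_new_rows: int = 10_000
    max_accuracy_drop: float = 0.05


def retrain_reasons(
    new_rows: int,
    baseline_accuracy: float,
    current_accuracy: float,
    policy: RetrainPolicy = RetrainPolicy(),
) -> List[str]:
    """Return the list of triggers that fired; empty means no retraining."""
    reasons: List[str] = []
    if new_rows >= policy.min_new_rows:
        reasons.append("new_data")
    if baseline_accuracy - current_accuracy > policy.max_accuracy_drop:
        reasons.append("performance_degradation")
    return reasons


if __name__ == "__main__":
    reasons = retrain_reasons(new_rows=25_000, baseline_accuracy=0.92, current_accuracy=0.85)
    if reasons:
        print("Trigger retraining pipeline:", ", ".join(reasons))
```

Returning the reasons rather than a bare boolean keeps an audit trail of why each retraining run started.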

Step 4: Adopt Integrated Versioning Tools

Use version control systems capable of managing software code, datasets, and ML models simultaneously. This enables reproducibility and easier rollback when issues arise.
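To show why linking code, data, and model versions makes rollback easier, here is a minimal in-memory registry sketch. It is not the MLflow Model Registry API; the `ModelRegistry` class, its methods, and the sample identifiers are hypothetical.

```python
from typing import Dict, List, Optional


class ModelRegistry:
    """Toy registry that links each model version to its code and data."""

    def __init__(self) -> None:
        self._versions: List[Dict[str, str]] = []
        self._promotions: List[int] = []  # history of live version numbers

    def register(self, code_rev: str, dataset_id: str, model_uri: str) -> int:
        """Store a new version and return its 1-based version number."""
        self._versions.append(
            {"code_rev": code_rev, "dataset_id": dataset_id, "model_uri": model_uri}
        )
        return len(self._versions)

    def promote(self, version: int) -> None:
        """Mark a registered version as the one serving traffic."""
        if not 1 <= version <= len(self._versions):
            raise KeyError(f"unknown version {version}")
        self._promotions.append(version)

    def rollback(self) -> int:
        """Revert to the previously promoted version and return its number."""
        if len(self._promotions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._promotions.pop()
        return self._promotions[-1]

    @property
    def live(self) -> Optional[Dict[str, str]]:
        """Metadata of the live version, or None if nothing is promoted."""
        if not self._promotions:
            return None
        return self._versions[self._promotions[-1] - 1]


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.register("a1b2c3", "sales-2024-01", "models/rec/1")
    v2 = registry.register("d4e5f6", "sales-2024-02", "models/rec/2")
    registry.promote(v1)
    registry.promote(v2)
    registry.rollback()
    print(registry.live)
```

Because each version records its code revision and dataset, rolling back the model also tells you exactly which code and data to restore with it.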

Step 5: Establish Unified Monitoring and Alerting

Set up monitoring that covers system health and ML-specific indicators. Integrate alerts to promptly detect anomalies in infrastructure or model behavior, reducing downtime and mitigating risks.
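A unified alerting layer can evaluate infrastructure and model metrics against one shared rule set, so both kinds of problems surface in the same place. The sketch below assumes a flat dictionary of current metric values; the metric names and thresholds in `RULES` are hypothetical examples, not recommended settings.

```python
from typing import Dict, List, Tuple

# Hypothetical rules: metric name -> (direction, threshold).
# "max" alerts when the value rises above the threshold,
# "min" alerts when it falls below it.
RULES: Dict[str, Tuple[str, float]] = {
    "latency_ms_p95": ("max", 500.0),   # system health
    "error_rate": ("max", 0.01),        # system health
    "model_accuracy": ("min", 0.90),    # ML health
    "drift_psi": ("max", 0.20),         # ML health
}


def evaluate_alerts(
    metrics: Dict[str, float],
    rules: Dict[str, Tuple[str, float]] = RULES,
) -> List[str]:
    """Return one alert message per breached or missing metric."""
    alerts: List[str] = []
    for name, (direction, threshold) in rules.items():
        if name not in metrics:
            alerts.append(f"{name}: no data")
            continue
        value = metrics[name]
        breached = value > threshold if direction == "max" else value < threshold
        if breached:
            alerts.append(f"{name}: {value} breaches {direction} threshold {threshold}")
    return alerts


if __name__ == "__main__":
    current = {
        "latency_ms_p95": 120.0,
        "error_rate": 0.002,
        "model_accuracy": 0.84,
        "drift_psi": 0.31,
    }
    for alert in evaluate_alerts(current):
        print(alert)
```

Treating a missing metric as an alert is a deliberate choice here: a model that silently stops reporting accuracy is itself an operational problem.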

Challenges in Building Unified Pipelines

Despite the benefits, integrating DevOps and MLOps pipelines comes with its own set of challenges.

Handling Complex Data Workflows

Data quality issues, preprocessing variability, and compliance requirements add layers of complexity absent in traditional software pipelines. Managing this effectively requires advanced orchestration and governance.

Bridging Skill Gaps

DevOps engineers often lack machine learning expertise, while data scientists are often unfamiliar with deployment pipelines and infrastructure management. Cross-training and ongoing education are critical to closing this gap.

Navigating Tooling Diversity

The ecosystem of DevOps and MLOps tools is vast and sometimes fragmented. Selecting tools that integrate well and support the entire unified pipeline demands careful evaluation and strategic planning.

Governance and Security Concerns

In regulated industries especially, pipelines must comply with security policies and with regulatory mandates around data privacy and model fairness. This adds overhead that must be managed carefully.

Best Practices for Success

To overcome these challenges, organizations should adopt a set of proven best practices.

Start Small and Iterate

Pilot a unified pipeline within a single project before scaling across the enterprise. Iterative development allows learning and adaptation without overwhelming teams.

Align Metrics Across Teams

Develop shared KPIs that encompass both software delivery performance and ML model effectiveness. This fosters alignment on joint business goals.

Leverage Cloud-Native Infrastructure

Managed cloud services such as Amazon SageMaker, Google Cloud Vertex AI, or Azure Machine Learning offer integrated tools that simplify pipeline unification, reducing development overhead.

Prioritize Automation and Repeatability

The more stages you can automate, the less room for human error remains. Automated pipelines also enable consistent, repeatable workflows that enhance trust in deployments.

Case Study: A Global Retailer Unifies DevOps and MLOps Pipelines

A leading international retail company faced significant challenges with delayed ML deployments and outdated models impacting customer experience. Their data scientists built cutting-edge recommendation algorithms, but operations teams struggled with deployment and scalability, resulting in low model availability during peak shopping seasons.

Solution

The company embarked on building a unified DevOps-MLOps pipeline by integrating Jenkins for CI/CD, Kubeflow for ML orchestration, and MLflow for model tracking. They automated data ingestion, continuous training triggered by fresh sales data, and deployed models into Kubernetes clusters that scaled automatically based on demand. Monitoring dashboards combined IT infrastructure health with model accuracy and drift metrics.

Outcome

- Deployment cycle time reduced from weeks to hours

- Model accuracy stabilized above 90%, improving recommendation quality

- Customer satisfaction and sales increased during critical marketing periods

- Collaboration between data science and engineering teams significantly improved, accelerating innovation

The Future of Unified Pipelines

As AI adoption grows, the convergence of DevOps and MLOps will deepen, leading to exciting trends.

AIOps for Intelligent Operations

Artificial Intelligence will increasingly manage IT operations and pipeline monitoring, predicting issues before they impact users.

Governance Embedded by Design

Compliance and ethical AI principles will be incorporated natively into pipelines, minimizing regulatory risks.

Generative AI Enhancements

Generative AI tools will assist in automatic code generation, testing, and pipeline optimization, accelerating development cycles further.

Conclusion

Building unified pipelines where DevOps meets MLOps is no longer a futuristic concept but a tactical imperative. Combining the strengths of both domains enables organizations to innovate faster, ensure higher reliability, and govern AI-powered applications effectively. As data and AI continue shaping business landscapes, unified pipelines will form the backbone of scalable, automated, and intelligent software delivery.

Frequently Asked Questions (FAQs)

What is the difference between DevOps and MLOps?

DevOps focuses on automating the development and deployment of software applications, while MLOps extends these principles to the machine learning lifecycle, including data and model management.

Why unify DevOps and MLOps pipelines?

Unified pipelines reduce development silos, accelerate the release of AI-powered features, improve monitoring, and ensure compliance across software and ML components.

What tools support unified pipelines?

Popular tools include Jenkins, GitLab CI/CD, Kubernetes, MLflow, Kubeflow, and Airflow. Cloud providers also offer integrated platforms like AWS SageMaker and Google Vertex AI.

What are common challenges in building unified pipelines?

Handling data complexity, bridging skill gaps, selecting compatible tools, and meeting governance requirements are key challenges.

Which industries benefit most from unified pipelines?

Retail, finance, healthcare, and manufacturing benefit most, because AI drives key applications in these industries such as recommendations, fraud detection, diagnostics, and predictive maintenance.