ETL vs ELT isn’t just a technical preference; it is a strategic choice that shapes how quickly an organization learns, how safely it handles sensitive data, and how efficiently it spends on compute and storage. Because the sequence of steps determines where transformations run, the decision directly affects latency, governance, cost, staffing, and even culture. Consequently, leaders rarely succeed by mandating a single pattern everywhere. Instead, they match the pattern to each domain’s needs—using transform‑first ETL for pre‑load control and land‑first ELT for agility and near‑real‑time insight. Moreover, as cloud platforms continue to scale elastically, the economics that once favored ETL for everything have shifted, making ELT an attractive default for many analytics‑heavy workloads. Nevertheless, ETL still plays a vital role where strict curation must happen before data touches a shared platform.

This ETL vs ELT guide goes beyond slogans. It explains the mechanics, illustrates real‑world trade‑offs, and gives a concrete plan to pick, implement, and operate the right pattern per domain—while keeping costs predictable and data trustworthy.

Core Concepts—How ETL vs ELT Truly Differ

At a glance, both approaches move and shape data. However, the order of operations changes almost everything.

- ETL: Extract → Transform → Load

Teams pull data from sources, then run transformations on an external engine, and finally load curated results into the warehouse. As a result, the warehouse stores standardized tables by design, and consumers read from stable, minimized datasets.

- ELT: Extract → Load → Transform

Teams land raw data quickly into a warehouse or lakehouse, and then run transformations in that platform. Therefore, analysts gain immediate access to raw history, and engineers iterate models rapidly as questions evolve.

Because ETL performs heavy lifting before the warehouse, it naturally supports strong pre‑load controls, such as tokenization, masking, and type enforcement. By contrast, ELT centralizes compute within the platform; consequently, it benefits from elastic scaling, vectorized execution, and columnar storage—making on‑demand modeling faster and cheaper than it once was. However, that convenience requires mature in‑platform governance so raw data doesn’t turn into a liability.

Why the Order Matters

The sequence establishes where quality checks, security controls, and compute costs live. With ETL, you purify data before loading, which simplifies access governance inside the warehouse because sensitive elements may never enter. With ELT, you land everything first and rely on platform‑level controls to restrict, mask, and audit usage. Consequently, ETL leans toward strong control and slower iteration, whereas ELT leans toward speed and flexible modeling. Neither is “right” universally; the domain decides.
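As a minimal sketch of that difference, the Python below runs the same toy transformation in both orders. The in-memory dictionaries standing in for a warehouse, the `transform` rule, and the table names are illustrative assumptions, not part of any real platform.

```python
Row = dict[str, object]

def transform(rows: list[Row]) -> list[Row]:
    """Toy transformation: drop the sensitive field and normalize the country code."""
    return [
        {"user_id": r["user_id"], "country": str(r["country"]).upper()}
        for r in rows
    ]

def run_etl(source: list[Row], warehouse: dict[str, list[Row]]) -> None:
    # Transform outside the warehouse, then load only the curated result.
    warehouse["curated_users"] = transform(source)

def run_elt(source: list[Row], warehouse: dict[str, list[Row]]) -> None:
    # Land raw rows first, then transform inside the "warehouse".
    warehouse["raw_users"] = list(source)
    warehouse["curated_users"] = transform(warehouse["raw_users"])

source = [{"user_id": 1, "email": "a@example.com", "country": "de"}]
etl_wh: dict[str, list[Row]] = {}
elt_wh: dict[str, list[Row]] = {}
run_etl(source, etl_wh)
run_elt(source, elt_wh)
```

Both runs produce the same curated table. The difference is that with ETL the `email` field never reaches the warehouse, while with ELT it lands in `raw_users` and has to be protected by platform controls.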

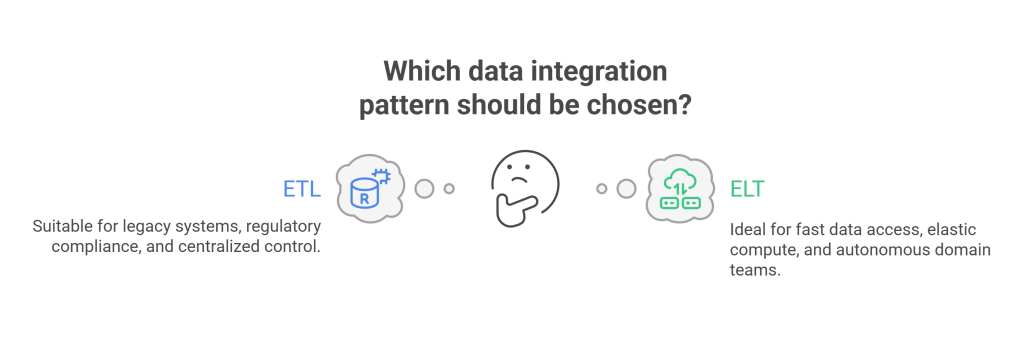

Where Each Pattern Excels

Different domains value different outcomes. Therefore, choose pattern by requirement, not by fashion.

- ETL excels when:

- Pre‑load de‑identification is mandatory due to policy or regulation.

- Ingested sources are legacy‑heavy and require complex standardization.

- Nightly or hourly batches are acceptable and predictability matters more than immediacy.

- Centralized control over schemas and data shapes reduces operational risk.

- ELT excels when:

- Time‑to‑data is crucial for product analytics, experimentation, or operational monitoring.

- Elastic compute and columnar storage make in‑platform transformations efficient.

- Layered modeling (raw → clean → curated) aligns with diverse consumers and fast iteration.

- Domain teams own data products and need autonomy to evolve models quickly.

Because most organizations include both high‑risk and fast‑moving domains, a hybrid approach usually delivers the best overall outcome.

Architecture at a Glance

It helps to visualize the flow.

- ETL Architecture

Sources → Staging/External Transform → Curated Warehouse Schemas

Transformation sits outside the warehouse and produces “ready‑to‑serve” tables. The warehouse handles consumption workloads with fewer surprises.

- ELT Architecture

Sources → Raw Landing in Warehouse or Lakehouse → In‑Warehouse Transforms → Curated Layers

Consequently, the platform becomes the transformation engine. Raw data lands quickly, and models materialize as consumers need them. Governance and cost controls must keep pace with the speed.

Latency and Freshness

Freshness drives value for many analytics use cases. ETL introduces batch windows because transforms must complete before load. However, that determinism benefits financial close, regulatory reporting, and audit‑heavy contexts. ELT favors agility: raw data lands immediately, and transformations run frequently—sometimes continuously—delivering sub‑hour dashboards for product funnels, personalization, or anomaly detection. Therefore, use ETL for stability and ELT for speed.

Cost Models and FinOps Essentials

Neither pattern is automatically cheaper. Cost efficiency emerges from engineering discipline.

- ETL Costs

External transformation engines (or clusters) handle the heavy lifting; the warehouse stores curated outputs. Because upstream filtering trims payloads, warehouses may scan less. Nevertheless, capacity planning for external engines can create its own overhead.

- ELT Costs

More compute shifts into the warehouse or lakehouse. Consequently, guardrails matter: isolate workloads (e.g., separate queues/warehouses), autosuspend idle engines, partition and cluster large tables, collect statistics, materialize incrementally, and archive old data to colder tiers. In addition, monitor ad hoc queries; exploratory scans can be expensive without limits.

In practice, the cheapest pipeline is the one you instrument, monitor, and continuously improve—regardless of acronym.
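One of the guardrails above, incremental materialization, can be sketched in a few lines. This is a hedged illustration only: the `loaded_at` column, the integer watermark, and the Python lists standing in for tables are assumptions made for the example.

```python
def materialize_incrementally(raw_rows, target_rows, watermark):
    """Append only rows loaded after `watermark`; return the new watermark."""
    new_rows = [r for r in raw_rows if r["loaded_at"] > watermark]
    target_rows.extend(new_rows)
    # If nothing is new, the watermark stays where it was.
    return max((r["loaded_at"] for r in new_rows), default=watermark)

raw = [{"id": 1, "loaded_at": 10}, {"id": 2, "loaded_at": 20}]
curated = []
watermark = materialize_incrementally(raw, curated, watermark=0)

# A later run only processes the row that arrived since the last watermark.
raw.append({"id": 3, "loaded_at": 30})
watermark = materialize_incrementally(raw, curated, watermark)
```

Each run touches only the rows past the watermark, which is why incremental models tend to scan far less data than a full rebuild.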

Data Quality—Avoiding Debt in Either Pattern

Quality debt compounds silently until it becomes expensive. ETL pays it up front; ELT pays inside the platform. Either way, plan to pay.

- For ETL

Enforce schema, range, and referential checks before load. Ensure business rules are deterministic and idempotent. Keep a staging area to replay loads when upstream sources change.

- For ELT

Validate on landing (schema, basic profiling), then test transformations as code. Write unit‑style checks for curated models: non‑null constraints, uniqueness, accepted values, foreign key relationships, and row‑count thresholds. Fail fast and notify owners.

- For Both

Adopt data contracts. Define schema, semantics, SLAs, ownership, change policy, and deprecation steps. Because contracts reduce “surprise changes,” they protect both producers and consumers.
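The unit‑style checks listed above could look roughly like the following. It is a sketch under stated assumptions: the `orders` rows, the column names, and the `run_checks` helper are hypothetical, and a real project would more likely use its transformation tool's built-in tests.

```python
def run_checks(rows, parent_keys, accepted_statuses, min_rows):
    """Return a list of readable failures; an empty list means the model passed."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"row count {len(rows)} below threshold {min_rows}")
    ids = [r["order_id"] for r in rows]
    if any(i is None for i in ids):
        failures.append("order_id contains nulls")
    if len(set(ids)) != len(ids):
        failures.append("order_id is not unique")
    for r in rows:
        if r["status"] not in accepted_statuses:
            failures.append(f"unexpected status {r['status']!r}")
        if r["customer_id"] not in parent_keys:
            failures.append(f"orphan customer_id {r['customer_id']!r}")
    return failures

orders = [
    {"order_id": 1, "customer_id": 10, "status": "paid"},
    {"order_id": 1, "customer_id": 99, "status": "lost"},
]
failures = run_checks(
    orders, parent_keys={10}, accepted_statuses={"paid", "refunded"}, min_rows=1
)
```

Here `failures` reports the duplicate `order_id`, the unexpected status, and the orphaned `customer_id`. A pipeline would stop and notify the owner whenever the list is non-empty.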

Modeling Discipline

Dimensional modeling stabilizes BI. Wide feature tables speed ML. Layered lakehouse patterns—raw/bronze, clean/silver, curated/gold—support progressive refinement and traceability. Moreover, documentation matters: a short, accurate README and column‑level descriptions often save hours of guesswork. Trust grows when consumers can understand what they are using—and how it’s maintained.

Security and Compliance by Design

Security that arrives late arrives too late. Build it in from the start.

- ETL Approach

Mask or tokenize sensitive fields before load. Write only de‑identified data to the warehouse. Restrict staging zones and log every transform that touches protected attributes.

- ELT Approach

Land raw data under strict controls: encryption in transit and at rest, granular RBAC/ABAC, dynamic column masking, row‑level filters, and comprehensive auditing. Rotate secrets, manage keys properly, and keep lineage verifiable.

- Choosing Per Domain

When regulations or policies prohibit raw PII in shared platforms, ETL is the natural fit. When platform controls meet the bar, ELT can satisfy compliance while preserving agility. In either case, involve security and legal during design, not during the week of launch.
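To make the pre‑load option concrete, here is a small sketch of de‑identification before load. It uses a keyed hash (HMAC‑SHA256) as a stand‑in for tokenization, which is pseudonymization rather than a vault‑backed token service. The field names and the hard‑coded key are placeholders for illustration only.

```python
import hashlib
import hmac

# Placeholder only: a real key would come from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-managed-secret"

def tokenize(value: str) -> str:
    """Deterministic keyed hash, so equal inputs still join on the token."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

def deidentify(row: dict) -> dict:
    """Build the only version of the row that is allowed to reach the warehouse."""
    return {
        "customer_token": tokenize(row["email"]),
        "email_masked": mask_email(row["email"]),
        "plan": row["plan"],
    }

safe_row = deidentify({"email": "alice@example.com", "plan": "pro"})
```

Because the token is deterministic, downstream joins on `customer_token` still work even though the raw address never leaves the staging zone.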

Team Skills and Operating Model

Structure follows strategy. ETL often sits with a centralized data engineering group that excels in mapping logic, schedulers, networking, and hybrid environments. ELT empowers analytics engineers closer to the business who write SQL models, add tests, and tune platform performance. Meanwhile, platform engineers ensure the warehouse or lakehouse scales safely and economically. Because domain ownership is on the rise, many organizations adopt a “hub‑and‑spoke” model: the hub operates shared standards, security, and sensitive ETL flows; the spokes own ELT pipelines for their data products.

Performance Tuning and Reliability

Small adjustments compound into big wins.

- ETL Tuning

Push filters and projections close to the source so you move less data. Partition large datasets for parallel processing. Cache dimension lookups rather than re‑querying. Right‑size external compute, then schedule heavy jobs off‑peak. Monitor end‑to‑end runtimes and identify hotspots, not just the slowest step.

- ELT Tuning

Co‑locate compute with storage to avoid egress penalties. Use partition pruning, clustering, and statistics to reduce scans. Materialize frequently queried models; drop or demote stale ones. Schedule near demand windows to reduce perceived latency. Profile queries and fix anti‑patterns: SELECT *, cross joins without filters, and non‑selective predicates.

- Reliability Patterns

Track freshness by domain, enforce data tests, and alert on failures. Watch schema drift and contract violations. Maintain incident runbooks. Practice recovery drills. Reliability grows when everyone knows what “normal” looks like—and what to do when it isn’t.
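Tracking freshness by domain can start very simply. The sketch below assumes you can obtain a last‑load timestamp per domain; the domain names and SLA values are made up for the example.

```python
from datetime import datetime, timedelta, timezone

def stale_domains(last_loaded, sla, now):
    """Return the domains whose latest load is older than their freshness SLA."""
    return sorted(d for d, ts in last_loaded.items() if now - ts > sla[d])

# A fixed "now" keeps the example deterministic; production code would use the current time.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "product_events": now - timedelta(minutes=50),
    "finance": now - timedelta(hours=20),
}
sla = {"product_events": timedelta(minutes=15), "finance": timedelta(hours=24)}
late = stale_domains(last_loaded, sla, now)
```

In this run only `product_events` is flagged, since finance is still inside its 24‑hour window. The returned list is what an alerting job would route to domain owners.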

Decision Matrix—How to Choose Quickly and Correctly

When to choose ETL:

- Pre‑load de‑identification is mandatory.

- Stable nightly or hourly batches meet business needs.

- Legacy sources dominate, and schema change is rare.

When to choose ELT:

- Sub‑hour freshness and exploratory analysis create advantage.

- The platform provides robust governance and elastic scaling.

- Teams embrace layered modeling and tests‑as‑code.

Hybrid (best fit):

- Domains diverge in risk, speed, or ownership.

- You’re migrating gradually from on‑prem to cloud.

- Centralized governance must coexist with domain autonomy.
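The criteria above can be read as a rough per‑domain heuristic. The sketch below is a deliberate simplification: the `DomainProfile` fields and the fallback to ETL are assumptions for illustration, and real decisions weigh more factors than three booleans.

```python
from dataclasses import dataclass

@dataclass
class DomainProfile:
    name: str
    requires_preload_deidentification: bool
    needs_subhour_freshness: bool
    platform_governance_ready: bool

def recommend_pattern(domain: DomainProfile) -> str:
    """Per-domain heuristic; a simplification of the criteria, not a rule."""
    if domain.requires_preload_deidentification:
        return "ETL"
    if domain.needs_subhour_freshness and domain.platform_governance_ready:
        return "ELT"
    # Assumption: fall back to batch ETL when neither condition is met.
    return "ETL"

def recommend_for_organization(domains: list[DomainProfile]) -> str:
    """Hybrid whenever the per-domain recommendations diverge."""
    patterns = {recommend_pattern(d) for d in domains}
    return "hybrid" if len(patterns) > 1 else patterns.pop()

finance = DomainProfile("finance", True, False, True)
product = DomainProfile("product_analytics", False, True, True)
overall = recommend_for_organization([finance, product])
```

With one sensitive domain and one fast‑moving domain, the organization‑level answer comes out as hybrid, which matches the guidance above.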

Case Study (Anonymized)—From Stale to Real‑Time Without Losing Control

Background

A mid‑market subscription business reported results 24–48 hours late. Marketing wanted same‑day campaign feedback. Product managers demanded live conversion funnels. Finance required airtight controls for billing and refunds.

Approach

The team refused a one‑size‑fits‑all decision and split by domain. Finance and compliance‑sensitive feeds remained on ETL to guarantee tokenization and deterministic batch windows. Meanwhile, product analytics moved to ELT. Raw app events landed in the lakehouse every five minutes. SQL models created user sessions, funnels, and attribution views; tests covered row counts, non‑nulls, uniqueness, and referential integrity. Role‑based access, dynamic masking, and row‑level policies protected sensitive flags. On the cost side, workload isolation, autosuspend, incremental materializations, and regular pruning kept spend in check.

Outcome

Dashboards refreshed every 10–15 minutes. Marketing adjusted campaigns daily instead of weekly. Finance preserved audit‑ready curation with fewer reconciliation issues, because upstream validation improved. Costs leveled off as engineers clustered large tables and removed cold models. Most importantly, trust increased: the business could see what changed, when it changed, and who owned it.

CDC and Schema Evolution—Handling Change Without Chaos

Change Data Capture (CDC) and schema evolution routinely break pipelines when ignored.

- CDC in ETL

External engines read logs or timestamps, transform deltas, and write merged outputs. Because transformations occur pre‑load, late‑arriving updates can be applied before the warehouse sees them. However, the pipeline complexity rises with each source system.

- CDC in ELT

Raw change events land quickly, then merge operations run in the warehouse. Consequently, teams can re‑run merges as rules evolve. Nevertheless, merges can be compute‑intensive; partition wisely and schedule thoughtfully.

- Schema Evolution

In ETL, strict typing up front prevents “garbage in” but can cause failures when a source adds a column unexpectedly. In ELT, raw landing absorbs new columns easily, although downstream models must adapt. Therefore, monitor for structural drift and update contracts promptly.
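The merge step for change events can be illustrated with a small, re‑runnable sketch. The event shape (`key`, `ts`, `op`, `row`) and the in‑memory state are assumptions for the example; a warehouse would typically express the same idea as a merge statement.

```python
def merge_changes(state, events):
    """Apply change events so that the newest event per key wins.

    Events older than (or equal to) what is already stored are skipped,
    which makes the merge safe to re-run and tolerant of late arrivals.
    """
    for e in events:
        current = state.get(e["key"])
        if current is not None and current["ts"] >= e["ts"]:
            continue  # stale or duplicate event
        state[e["key"]] = {
            "ts": e["ts"],
            "deleted": e["op"] == "delete",
            "row": e.get("row"),
        }
    return state

def current_rows(state):
    """Project the merged state to the rows that still exist."""
    return {k: v["row"] for k, v in state.items() if not v["deleted"]}

events = [
    {"key": 1, "ts": 2, "op": "update", "row": {"plan": "pro"}},
    {"key": 1, "ts": 1, "op": "insert", "row": {"plan": "free"}},  # late arrival
    {"key": 2, "ts": 1, "op": "insert", "row": {"plan": "free"}},
    {"key": 2, "ts": 3, "op": "delete"},
]
state = merge_changes({}, events)
rows = current_rows(state)
```

Key 1 keeps its newer `pro` row despite the late insert, and key 2 is gone because the delete is its latest event. Replaying the same events leaves the result unchanged.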

Streaming vs. Micro‑Batch—Choosing the Right Ingestion Style

Not every use case requires full streaming.

- Streaming

Choose when events drive decisions that cannot wait: fraud detection, real‑time personalization, industrial telemetry alerts. Streaming pairs naturally with ELT since raw events land continuously.

- Micro‑Batch

For most analytics, micro‑batches of 1–10 minutes strike the right balance. They simplify exactly‑once semantics and reduce operational complexity while keeping dashboards fresh.

- Classic Batch

Use for stable reporting where next‑day freshness suffices and simplicity helps reliability.
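For intuition, micro‑batching amounts to grouping events into fixed time windows and processing each window as a unit. The sketch below assumes events carry an integer `ts` in seconds; the 60‑second window is just an example value.

```python
from collections import defaultdict

def micro_batches(events, window_seconds):
    """Group events by the start of the fixed window their timestamp falls into."""
    batches = defaultdict(list)
    for e in events:
        window_start = e["ts"] - e["ts"] % window_seconds
        batches[window_start].append(e)
    # Sort by window start so batches are processed in time order.
    return dict(sorted(batches.items()))

events = [{"ts": 5}, {"ts": 61}, {"ts": 119}, {"ts": 300}]
batches = micro_batches(events, window_seconds=60)
```

Each key is a window start, so a failed window can be retried as a whole without touching its neighbors.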

A 30‑Day Rollout Plan—From Decision to Demonstrated Value

Week 1: Baseline and Prioritize

- Map domains, SLAs, and sensitivity levels.

- Measure current freshness, failure rates, and cost by workload.

- Select one domain with obvious freshness pain to pilot ELT; keep sensitive domains on ETL.

Week 2: Contracts and Security

- Draft data contracts (schema, semantics, SLAs, owners, change policy).

- Define security defaults—encryption, RBAC/ABAC, masking, and auditing.

- Provision isolated compute for modeling and ad hoc users.
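A drafted contract can be kept as a small, versionable object and compared against what actually arrives. The sketch below is one possible shape, not a standard: the `DataContract` fields, the type names, and the `schema_drift` helper are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DataContract:
    dataset: str
    owner: str
    freshness_sla_minutes: int
    schema: dict  # column name -> type name

def schema_drift(contract: DataContract, observed: dict) -> dict:
    """Compare the observed columns and types against the contract."""
    expected = contract.schema
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(
            c for c in expected if c in observed and observed[c] != expected[c]
        ),
    }

contract = DataContract(
    dataset="orders",
    owner="payments-team",
    freshness_sla_minutes=60,
    schema={"order_id": "int", "amount": "decimal", "status": "string"},
)
observed = {"order_id": "int", "amount": "float", "coupon": "string"}
drift = schema_drift(contract, observed)
```

Any non-empty entry in `drift` is a prompt to follow the contract's change policy instead of letting a change reach consumers unannounced.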

Week 3: Build and Test

- Land raw data (batch or micro‑batch).

- Implement layered models (raw → clean → curated).

- Add tests (non‑null, uniqueness, accepted values, referential integrity, and row counts).

- Wire alerts and dashboards for freshness and failures.

Week 4: Optimize and Show Value

- Materialize incrementally; partition and cluster large tables.

- Prune unneeded models; set retention policies.

- Release a business‑facing dashboard with freshness SLAs.

- Document the runbook and capture before/after metrics (freshness, time saved, cost trend).

KPIs That Prove Impact

- Freshness by domain (minutes/hours vs. SLA).

- Failed tests per run and mean time to resolve.

- 95th‑percentile query runtime for key models.

- Cost per curated table and cost per 1,000 queries.

- Incidents per quarter and mean time to recovery.

- Adoption metrics (active users, queries, dependency depth).

- Coverage (percentage of models with owners and current documentation).

- Contract health (on‑time updates when sources change).
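Two of these KPIs are simple to compute once the raw measurements exist. The sketch below uses the nearest‑rank definition of the 95th percentile, which is one of several accepted definitions, and the sample numbers are invented for illustration.

```python
from statistics import mean

def p95(values):
    """95th percentile by the nearest-rank method (no interpolation)."""
    if not values:
        raise ValueError("p95 needs at least one value")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integers
    return ordered[rank - 1]

runtimes_seconds = list(range(1, 21))  # 20 sample query runtimes: 1..20 s
resolve_minutes = [30, 60, 90]         # time to resolve three failed tests

p95_runtime = p95(runtimes_seconds)
mean_time_to_resolve = mean(resolve_minutes)
```

For these samples the 95th‑percentile runtime is 19 seconds and the mean time to resolve is 60 minutes. Tracking both per domain over time matters more than any single reading.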

Common Pitfalls—and Practical Fixes

- “Raw Forever” in ELT

Fix with contracts, ownership, tests, and lifecycle rules. Retire stale models on a schedule and publish a catalog with clear “gold” tables.

- Over‑Curation in ETL

Provide an ELT sandbox so analysts can explore without waiting for entire chains to be re‑tooled. Snapshot outputs to avoid destabilizing downstream users.

- Unmanaged Costs

Isolate workloads, autosuspend engines, partition effectively, cluster selectively, and materialize incrementally. Publish cost dashboards so teams self‑correct.

- Security Added Late

Run design reviews early; test masking, roles, and row policies; and audit regularly. Rotate credentials and minimize standing privileges.

- No Single Source of Truth

Declare canonical curated models per domain. Document semantics, owners, and SLAs; point BI layers and ML features at these “gold” sources.

Migration Path—ETL‑Only to Hybrid or ELT‑First

- Stabilize and Measure

Instrument current pipelines; capture freshness, failure, and cost baselines. Fix the worst reliability issues first.

- Pilot ELT Where It Matters

Pick one domain with obvious business pain; land data quickly, model in layers, and add tests. Report results.

- Standardize the Way of Working

Publish contracts, naming, lifecycle, and security defaults. Establish code review rules for curated layers.

- Optimize and Scale

Add FinOps controls, consolidate overlapping models, expand self‑service with curated semantic layers.

- Review and Iterate Quarterly

Compare domain outcomes to baselines; double down where gains are clear and adjust where friction persists.

Conclusion

ETL vs ELT is not a rivalry; it is a portfolio. Use ETL where pre‑load control and deterministic curation protect the business. Use ELT where speed to insight and iterative modeling create advantage. Adopt a hybrid where domains diverge—and expect them to. Then, pair the chosen pattern with the fundamentals that make pipelines reliable: contracts, tests, observability, security‑by‑design, and FinOps discipline. When you do, your data flows faster, costs stay predictable, and the organization learns at the speed your market demands.

FAQs

Is ETL obsolete in the cloud era?

Absolutely not. ETL remains essential where pre‑load de‑identification is mandatory, where deterministic batches build trust, and where legacy systems make transform‑first simpler.

Does ELT always deliver faster insights?

Often yes; however, ELT only stays fast when governed. Contracts, tests, ownership, and FinOps keep speed from turning into chaos or cost sprawl.

Can one team operate both patterns effectively?

Yes—if responsibilities are explicit. Many organizations centralize sensitive ETL while domain teams own ELT data products and downstream models.

How do we control ELT costs as adoption grows?

Isolate workloads, autosuspend idle compute, partition and cluster wisely, materialize incrementally, archive cold data, and publish cost dashboards with shared targets.
