Introduction to Big Data Observability

In today’s data-driven landscape, Big Data Observability and cost optimization are essential for businesses that want to stay competitive. As organizations rely more heavily on large-scale analytics, they need to monitor data workflows effectively and keep cloud data costs under control. This article explains why observability matters, walks through its key use cases, and surveys the tools that support it, for practitioners and decision-makers alike.

As digital ecosystems grow more complex, real-time visibility, predictive insights, and actionable intelligence become more important. Businesses should therefore invest in observability solutions that track performance and also uncover inefficiencies and opportunities for cost savings.

Why Big Data Observability Matters

The Strategic Necessity of Observability

Big Data Observability is not merely a technical requirement; it is a strategic necessity. As data volumes grow, organizations find it harder to keep their data pipelines running smoothly. With robust observability practices in place, companies can detect issues early, allocate costs accurately, and optimize resource usage.

Observability also supports cross-functional collaboration. It gives IT teams, data engineers, and finance stakeholders a shared set of performance and cost metrics to align around, which strengthens business agility and accountability.

The Importance of Monitoring Data Workflows

Monitoring data workflows allows businesses to identify bottlenecks and failures in real time. When a data pipeline fails, the repercussions can be significant: delayed insights, customer dissatisfaction, and increased operational costs. Effective observability lets teams resolve issues quickly, which minimizes downtime and keeps data operations running smoothly.

Continuous monitoring builds confidence among stakeholders. It also helps improve data accuracy, supports regulatory compliance, and encourages timely decision-making.

The Business Impact of Poor Observability

Failing to implement proper observability can lead to several negative consequences, including:

- Increased Downtime: Without real-time monitoring, issues can go unnoticed, resulting in prolonged downtime and service interruptions.

- Data Quality Issues: Unmonitored pipelines can produce inaccurate or incomplete data, compromising the integrity of analytics.

- Escalating Costs: Inefficient resource usage can lead to ballooning cloud expenses, ultimately affecting the bottom line.

- Missed Opportunities: Delays in data processing can result in missed business opportunities and slower responses to market changes.

Organizations should therefore treat observability as an ongoing investment rather than a one-time deployment.

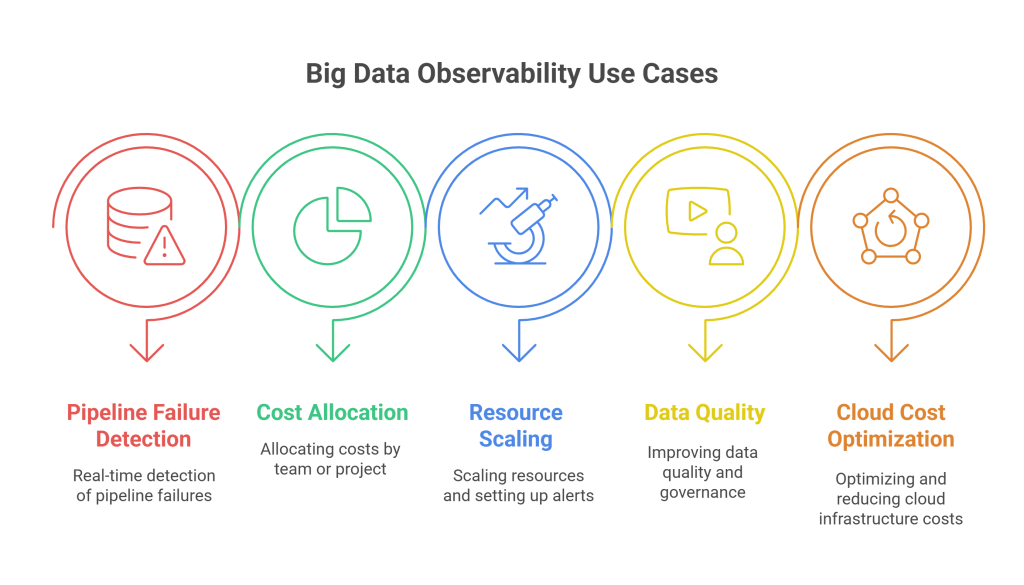

Key Use Cases of Big Data Observability

1. Detecting Pipeline Failures in Real-Time

Real-time failure detection is vital for maintaining the integrity of data pipelines. With monitoring tools in place, teams receive alerts as soon as something goes wrong and can address issues before they escalate, saving both time and resources.

Example Scenario:

A financial services company relies on real-time data for fraud detection. If its data pipeline fails, fraudulent transactions may go undetected, leading to significant financial losses. With observability tools in place, the company can quickly identify and fix failures and keep data flowing.

In essence, real-time observability functions as an early warning system that protects both revenue and reputation.
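One simple way to approximate this kind of early warning is a freshness check: flag any pipeline whose last successful run is older than an agreed threshold. The sketch below is illustrative only. The function name `find_stale_pipelines`, the pipeline names, and the 15-minute threshold are assumptions made for the example, not part of any specific tool.

```python
from datetime import datetime, timedelta, timezone


def find_stale_pipelines(last_success, max_age, now=None):
    """Return the names of pipelines whose last successful run is older than max_age.

    last_success maps a pipeline name to the timestamp of its last successful run.
    """
    now = now or datetime.now(timezone.utc)
    return sorted(
        name for name, finished_at in last_success.items()
        if now - finished_at > max_age
    )


# Hypothetical pipeline names and timestamps, fixed so the result is reproducible.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_success = {
    "fraud_scoring": now - timedelta(minutes=3),
    "transactions_ingest": now - timedelta(minutes=45),
}

stale = find_stale_pipelines(last_success, max_age=timedelta(minutes=15), now=now)
print(stale)  # ['transactions_ingest']
```

In practice the returned list would feed an alerting channel rather than a `print` call, and the threshold would be set per pipeline according to how fresh its data needs to be.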

2. Cost Allocation per Team/Project

As companies scale, understanding who is using data resources becomes essential. Big Data Observability enables organizations to allocate costs accurately to specific teams or projects. This transparency fosters accountability and encourages teams to optimize their resource usage.

Benefits:

- Informed Decision-Making: Analyze the cost-effectiveness of data projects for better budgetary decisions.

- Encouraged Efficiency: Awareness of spending prompts teams to seek cost-effective solutions.

Granular cost allocation also allows finance teams to forecast expenditures more accurately, leading to better planning and reduced waste.
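Cost allocation of this kind usually comes down to grouping usage records by an owner tag. The following is a minimal sketch, assuming each record carries a `team` tag and a `cost_usd` amount. Both field names, the `allocate_costs` helper, and the sample records are hypothetical. Untagged spend is kept in its own bucket so it stays visible instead of being silently dropped.

```python
from collections import defaultdict


def allocate_costs(usage_records, untagged_label="unallocated"):
    """Sum cost per team; records without a team tag go to untagged_label."""
    totals = defaultdict(float)
    for record in usage_records:
        team = record.get("team") or untagged_label
        totals[team] += record["cost_usd"]
    return dict(totals)


# Hypothetical usage records, as they might be exported from a billing report.
records = [
    {"resource": "warehouse-a", "team": "analytics", "cost_usd": 120.0},
    {"resource": "cluster-b", "team": "ml", "cost_usd": 300.0},
    {"resource": "warehouse-c", "team": "analytics", "cost_usd": 80.0},
    {"resource": "bucket-d", "team": None, "cost_usd": 15.5},
]

costs = allocate_costs(records)
print(costs)  # {'analytics': 200.0, 'ml': 300.0, 'unallocated': 15.5}
```

A large `unallocated` total is itself a useful signal: it shows how much spend cannot yet be attributed to a team or project.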

3. Resource Scaling and Alerting

Effective resource scaling is pivotal in managing cloud costs. With observability in place, businesses can monitor usage patterns and scale resources accordingly. Alerting systems can notify teams of unusual activity, allowing for timely adjustments and preventing overspending.

Scaling Strategies:

- Auto-Scaling: Adjust resources based on real-time demand.

- Scheduled Scaling: Align resource usage with predictable workload patterns.

Predictive analytics can also anticipate surges in usage, enabling preemptive scaling that avoids performance bottlenecks and budget overruns.
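To make the auto-scaling idea concrete, the sketch below shows one common rule, often called target tracking: size the worker pool so that average utilization moves toward a target, within fixed bounds. The function name `desired_workers`, the 60% target, and the 1 to 20 worker limits are assumptions chosen for the example. Real auto-scalers typically add cooldown periods and smoothing on top of a rule like this.

```python
import math


def desired_workers(current_workers, avg_utilization_pct,
                    target_utilization_pct=60, min_workers=1, max_workers=20):
    """Return the worker count that would bring utilization close to the target.

    Utilization values are whole percentages (for example 90 for 90%).
    """
    if current_workers < 1:
        raise ValueError("current_workers must be at least 1")
    raw = math.ceil(current_workers * avg_utilization_pct / target_utilization_pct)
    # Clamp to the allowed range so a metric spike cannot cause runaway spend.
    return max(min_workers, min(max_workers, raw))


print(desired_workers(4, 90))   # 6: scale out under heavy load
print(desired_workers(10, 30))  # 5: scale in when capacity sits idle
```

The `max_workers` bound doubles as a cost guardrail: hitting it is a good moment to raise an alert rather than to keep adding capacity.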

4. Enhancing Data Quality and Governance

Data quality is crucial for accurate analytics. Observability tools can help track data integrity and lineage, identifying where errors occur so that teams can address them. This supports both analytics quality and regulatory compliance.

Data Governance Practices:

- Data Lineage Tracking: Identify the origin and flow of data to enhance trust.

- Automated Quality Checks: Ensure high-quality data through automated validations.

Where regulations such as GDPR or HIPAA apply, data governance backed by observability helps ensure responsible and secure data usage.
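Automated quality checks can start very small. The sketch below validates a batch of rows for three things: a minimum row count, missing values in required fields, and duplicate keys. The function name `run_quality_checks`, the field names, and the sample rows are hypothetical. Dedicated data quality tools offer far richer rule sets, but the underlying pattern is similar.

```python
def run_quality_checks(rows, required_fields, key_field, min_rows=1):
    """Return a list of human-readable issues found in a batch of rows."""
    issues = []
    if len(rows) < min_rows:
        issues.append(f"row count {len(rows)} is below minimum {min_rows}")

    seen_keys = set()
    for index, row in enumerate(rows):
        # Completeness: every required field must have a non-empty value.
        for field in required_fields:
            if row.get(field) in (None, ""):
                issues.append(f"row {index}: missing value for '{field}'")
        # Uniqueness: the key field must not repeat within the batch.
        key = row.get(key_field)
        if key is not None:
            if key in seen_keys:
                issues.append(f"row {index}: duplicate {key_field}={key}")
            seen_keys.add(key)
    return issues


# Hypothetical batch with one missing amount and one duplicated order_id.
rows = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 10.0},
]

issues = run_quality_checks(rows, required_fields=["order_id", "amount"],
                            key_field="order_id")
for issue in issues:
    print(issue)
```

Running checks like these at each pipeline stage, and recording which stage reported the issue, gives a basic form of the lineage-aware error tracking described above.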

5. Optimizing Cloud Costs

Cloud costs can quickly spiral if not managed effectively. Big Data Observability allows organizations to track spending in real time, identify waste, and optimize resource allocation.

Cost Optimization Techniques:

- Right-Sizing: Adjust cloud resources to meet actual usage needs.

- Usage Reporting: Enable smarter decision-making through regular usage reports.

Ultimately, combining observability with FinOps principles empowers organizations to create a culture of cost accountability.
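Right-sizing usually begins with a list of candidates: resources whose observed utilization never approaches their capacity. The sketch below flags instances whose peak CPU over a sampling window stays under a threshold. The function name `rightsizing_candidates`, the 20% threshold, and the instance names are assumptions for illustration. A real review would also consider memory, I/O, and seasonality before resizing anything.

```python
def rightsizing_candidates(instances, peak_cpu_threshold_pct=20):
    """Return names of instances whose peak CPU stayed below the threshold.

    Instances with no samples are skipped, because missing data is not
    evidence of low usage.
    """
    candidates = []
    for instance in instances:
        samples = instance["cpu_samples_pct"]
        if samples and max(samples) < peak_cpu_threshold_pct:
            candidates.append(instance["name"])
    return candidates


# Hypothetical utilization samples collected over a review window.
instances = [
    {"name": "etl-worker-1", "cpu_samples_pct": [55, 70, 62]},
    {"name": "etl-worker-2", "cpu_samples_pct": [5, 12, 9]},
    {"name": "etl-worker-3", "cpu_samples_pct": []},
]

candidates = rightsizing_candidates(instances)
print(candidates)  # ['etl-worker-2']
```

Using the peak rather than the average is a deliberately conservative choice: it avoids downsizing a machine that is idle most of the day but fully loaded during a nightly batch.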

Tools for Big Data Observability and Cost Optimization

Monte Carlo

Monte Carlo provides a suite for monitoring data pipelines with a focus on reliability and availability. Real-time alerts help teams address issues quickly.

Key Features:

- Automated anomaly detection

- Downstream impact analysis

Monte Carlo’s proactive monitoring is intended to reduce data downtime and boost operational confidence.
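To give a feel for what automated anomaly detection means in general, the sketch below applies a basic z-score test to a pipeline metric such as daily row counts. This is a generic statistical illustration and not a description of how Monte Carlo or any other product works internally. The function name `is_anomalous`, the threshold of 3 standard deviations, and the sample counts are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Return True if latest deviates from history by more than z_threshold sigmas."""
    if len(history) < 2:
        return False  # Not enough data to estimate normal variation.
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily row counts for one table.
daily_row_counts = [1000, 1020, 980, 1010, 990]

print(is_anomalous(daily_row_counts, 400))   # True: volume dropped sharply
print(is_anomalous(daily_row_counts, 1005))  # False: within normal variation
```

A check like this catches sudden volume drops or spikes without anyone having to hand-write a threshold for each table, which is the main appeal of automated detection.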

Datadog for Data Pipelines

Datadog integrates with data pipelines to provide performance insights and real-time alerting.

Benefits:

- Custom dashboards

- Seamless integration with multiple platforms

Datadog also exposes APIs that can be integrated into CI/CD pipelines, enabling continuous observability.

CloudZero

CloudZero helps organizations track and manage cloud spending with detailed team-level insights.

Features:

- Real-time cost monitoring

- Team-based usage tracking

Its detailed visualizations make it easier for teams to identify cost-saving opportunities without sacrificing performance.

FinOps Practices

FinOps fosters collaboration between finance, engineering, and product teams to manage cloud costs.

Implementation Tips:

- Cross-functional collaboration

- Regular spending reviews

As a practice, FinOps complements observability by ensuring cost visibility and enabling agile financial decisions.

Case Study: Implementing Big Data Observability

Overview:

A leading e-commerce company faced challenges with frequent pipeline failures and high cloud costs.

Implementation:

The company adopted Monte Carlo and Datadog for real-time monitoring and introduced team-level cost allocation. It also trained its teams to interpret observability metrics and align them with operational goals.

Results:

- 40% reduction in pipeline failures

- 30% decrease in cloud costs

- Enhanced data quality and improved team collaboration

Lessons Learned:

- Invest in team training and onboarding

- Continuously refine observability strategies

- Align observability metrics with business KPIs

This case underscores how integrated observability and cost governance can drive measurable business outcomes while fostering a culture of accountability and innovation.

Challenges in Implementing Big Data Observability

1. Data Silos

Disparate systems hinder unified observability.

Solutions:

- Use unified platforms

- Promote cross-department collaboration

2. Lack of Skilled Personnel

Skilled professionals are crucial for effective implementation.

Solutions:

- Train existing employees

- Hire specialized experts

3. Resistance to Change

Cultural resistance can slow down adoption.

Solutions:

- Use change management strategies

- Start with pilot projects

4. Complexity of Data Environments

Hybrid environments increase monitoring complexity.

Solutions:

- Employ advanced tools

- Standardize data operations

5. Budget Constraints

Financial constraints can limit the scope of observability initiatives.

Solutions:

- Prioritize high-impact areas

- Leverage open-source or low-cost tools

To overcome these challenges, companies must blend technology, training, and strategy to create a scalable observability model that evolves with their needs.

Future Trends in Big Data Observability

- AI and ML Integration: Enhance pattern detection, automate root cause analysis, and deliver predictive analytics.

- Enhanced Automation: Reduce manual oversight with self-healing systems and smart alerts.

- DevOps Integration: Enable seamless integration with development pipelines, accelerating deployments.

- Data Privacy Focus: Ensure compliance with increasing regulations such as GDPR, HIPAA, and CCPA.

- Custom Dashboards: Offer greater personalization, allowing each team to monitor what matters most.

- Edge Observability: As edge computing grows, monitoring decentralized data becomes crucial.

- Unified Data Ops Platforms: All-in-one solutions will simplify management and reduce tool sprawl.

As these trends unfold, observability will become more intelligent, user-centric, and aligned with business value.

Conclusion

Big Data Observability and cost optimization are foundational to a successful analytics strategy. By deploying the right tools and embracing best practices, organizations can improve efficiency, reduce costs, and build a culture of accountability. As challenges evolve, observability strategies must evolve with them, which requires constant learning, collaboration, and adaptation.

Ultimately, success lies in a proactive, well-integrated approach that combines technology with people and processes. Enterprises that take this approach can unlock the full value of their data and support sustainable growth in an increasingly data-centric world.